Created: August 02, 2021

Modified: August 06, 2021

do-calculus

This page is from my personal notes, and has not been specifically reviewed for public consumption. It might be incomplete, wrong, outdated, or stupid. Caveat lector.

- References:

- A causal graph contains variables connected by directed edges, indicating causal effects. It is inherently a model, indicating assumptions about the existence and direction of causal relationships. If we also assume specific conditional distributions (and/or deterministic functions) for the edges, then we have a structural equation model.

- A structural equation model is usually specified in terms of functions that take random inputs, e.g., $Y = f_Y(X, \epsilon_Y)$, where $\epsilon_Y$ is an exogenous noise variable. This allows us to talk about counterfactuals: questions of the form 'given that $(X, Y) = (x, y)$ happened, what would $Y$ have been if $X$ had been $x'$ instead?'
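To make the counterfactual idea concrete, here is a minimal sketch assuming a hypothetical two-variable linear SEM, $X = \epsilon_X$ and $Y = 2X + \epsilon_Y$ (the coefficient 2 and the function names are made up for illustration). It follows the usual three steps: infer the noise from what actually happened (abduction), overwrite $X$ (action), and recompute $Y$ with the same noise (prediction).

```python
# Hypothetical linear SEM, assumed for illustration only:
#   X = eps_x
#   Y = BETA * X + eps_y
BETA = 2.0


def f_y(x: float, eps_y: float) -> float:
    """Structural equation for Y given its parent X and its noise term."""
    return BETA * x + eps_y


def counterfactual_y(x_obs: float, y_obs: float, x_new: float) -> float:
    """What would Y have been if X had been x_new, given we saw (x_obs, y_obs)?"""
    # Abduction: recover the noise value consistent with the observation.
    eps_y = y_obs - BETA * x_obs
    # Action + prediction: set X := x_new and recompute Y with the SAME noise.
    return f_y(x_new, eps_y)


if __name__ == "__main__":
    # Observed X = 1, Y = 3, so eps_y = 1; had X been 2, Y would have been 5.
    print(counterfactual_y(x_obs=1.0, y_obs=3.0, x_new=2.0))  # 5.0
```

The key point is that the noise is held fixed across the factual and counterfactual worlds; a plain conditional like $p(y \mid x')$ would instead average over all noise values.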

- Interpreted as a directed graphical model, the causal graph defines a joint distribution $p(U, V)$ on latent and observed variables (say $U$ latent and $V$ observed); in particular, it defines a marginal joint distribution $p(V) = \sum_U p(U, V)$ on the observed variables. This is the distribution from which we derive conditionals like $p(y \mid x)$.

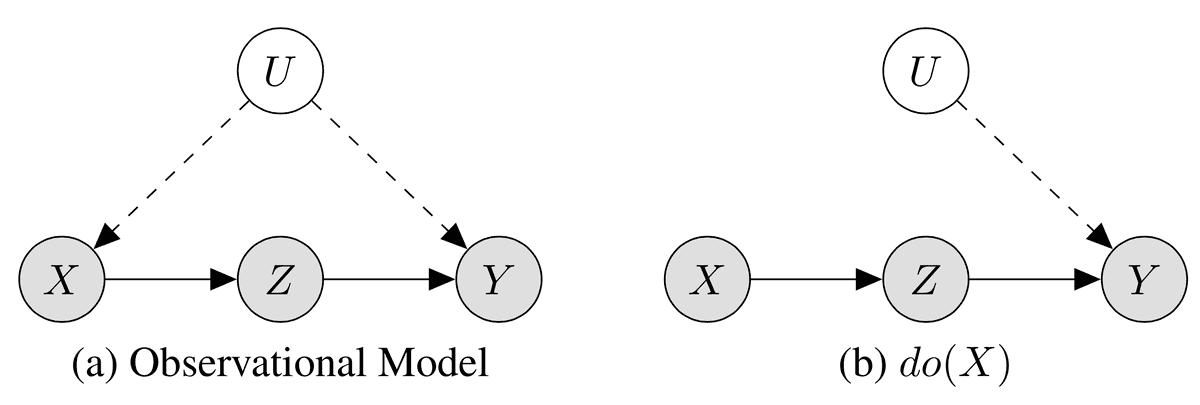
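As a small numeric illustration, assume a hypothetical binary model with latent $U$ and observed $X, Y$, wired as $U \rightarrow X$, $U \rightarrow Y$, $X \rightarrow Y$ (all probabilities below are made up). The graph factorizes the full joint as $p(u)\,p(x \mid u)\,p(y \mid x, u)$; summing out $U$ gives the observational joint $p(x, y)$, from which conditionals like $p(y \mid x)$ follow.

```python
from itertools import product

# Hypothetical CPTs for binary U -> X, U -> Y, X -> Y, with U latent (numbers made up).
P_U = {0: 0.5, 1: 0.5}                      # p(u)
P_X1_GIVEN_U = {0: 0.2, 1: 0.8}             # p(x=1 | u)
P_Y1_GIVEN_XU = {(0, 0): 0.1, (0, 1): 0.5,  # p(y=1 | x, u), keyed by (x, u)
                 (1, 0): 0.3, (1, 1): 0.7}


def bern(p1: float, v: int) -> float:
    """Probability of value v in {0, 1} under a Bernoulli with P(1) = p1."""
    return p1 if v == 1 else 1.0 - p1


# Full joint p(u, x, y) from the DAG factorization p(u) p(x | u) p(y | x, u).
P_UXY = {
    (u, x, y): P_U[u] * bern(P_X1_GIVEN_U[u], x) * bern(P_Y1_GIVEN_XU[(x, u)], y)
    for u, x, y in product((0, 1), repeat=3)
}

# Marginal joint over the observables: sum out the latent U.
P_XY = {(x, y): P_UXY[(0, x, y)] + P_UXY[(1, x, y)]
        for x, y in product((0, 1), repeat=2)}


def p_y_given_x(y: int, x: int) -> float:
    """Observational conditional p(y | x) = p(x, y) / p(x)."""
    return P_XY[(x, y)] / (P_XY[(x, 0)] + P_XY[(x, 1)])


for (x, y), p in sorted(P_XY.items()):
    print(f"p(x={x}, y={y}) = {p:.3f}")
print(f"p(y=1 | x=1) = {p_y_given_x(1, 1):.3f}")
```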

- We model an intervention on the causal graph by setting the value of the corresponding node and deleting all of its incoming edges. This gives a new joint distribution, and a new marginal joint distribution on observables. Associations derived from this joint distribution have the form $p(y \mid \mathrm{do}(x))$, or, e.g., we might have quantities like $p(y \mid \mathrm{do}(x), z)$.
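Continuing with a hypothetical binary model ($U \rightarrow X$, $U \rightarrow Y$, $X \rightarrow Y$; numbers made up), here is a minimal sketch of what deleting the incoming edges does numerically. Deleting $U \rightarrow X$ amounts to dropping the factor $p(x \mid u)$ from the factorization and clamping $X = x$ (the truncated factorization). This is only computable here because we pretend to know the full model, including the latent $U$.

```python
# Hypothetical binary model, numbers made up: U -> X, U -> Y, X -> Y.
P_U = {0: 0.5, 1: 0.5}                      # p(u)
P_X1_GIVEN_U = {0: 0.2, 1: 0.8}             # p(x=1 | u)
P_Y1_GIVEN_XU = {(0, 0): 0.1, (0, 1): 0.5,  # p(y=1 | x, u), keyed by (x, u)
                 (1, 0): 0.3, (1, 1): 0.7}


def bern(p1: float, v: int) -> float:
    """Probability of value v in {0, 1} under a Bernoulli with P(1) = p1."""
    return p1 if v == 1 else 1.0 - p1


def p_y_given_x(y: int, x: int) -> float:
    """Observational p(y | x), with the latent U summed out."""
    num = sum(P_U[u] * bern(P_X1_GIVEN_U[u], x) * bern(P_Y1_GIVEN_XU[(x, u)], y)
              for u in (0, 1))
    den = sum(P_U[u] * bern(P_X1_GIVEN_U[u], x) for u in (0, 1))
    return num / den


def p_y_do_x(y: int, x: int) -> float:
    """Interventional p(y | do(x)) via the truncated factorization: delete the
    factor p(x | u) (i.e., the edge U -> X), clamp X = x, then sum out U."""
    return sum(P_U[u] * bern(P_Y1_GIVEN_XU[(x, u)], y) for u in (0, 1))


print(round(p_y_given_x(1, 1), 4))  # 0.62: seeing X=1 also suggests U is likely 1
print(round(p_y_do_x(1, 1), 4))     # 0.5: setting X=1 says nothing about U
```

The gap between the two numbers is exactly the confounding through $U$: in the intervened joint, $X$ and $U$ are independent, whereas in the observational joint they are not.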

- In general, given a graph $G$, let $G_{\overline{X}}$ denote the mutilated graph in which we've deleted all incoming edges to $X$.

- Also let $G_{\underline{X}}$ denote the graph in which we've deleted all edges out of $X$. Note that this is a valid operation on the graph itself, even though we can't in general derive a joint distribution corresponding to the new graph.
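Both operations are just edge deletions, so a minimal sketch needs nothing more than a set of directed edges. The function names `remove_incoming` and `remove_outgoing` and the example graph are hypothetical, chosen for illustration.

```python
# A DAG as a set of (parent, child) edges; node names are illustrative only.
Edge = tuple[str, str]


def remove_incoming(edges: set[Edge], nodes: set[str]) -> set[Edge]:
    """G_bar(X): delete every edge pointing INTO a node in `nodes`."""
    return {(a, b) for (a, b) in edges if b not in nodes}


def remove_outgoing(edges: set[Edge], nodes: set[str]) -> set[Edge]:
    """G_underline(X): delete every edge coming OUT of a node in `nodes`."""
    return {(a, b) for (a, b) in edges if a not in nodes}


# Example: U -> X, U -> Y, X -> Y (a confounded treatment X and outcome Y).
G = {("U", "X"), ("U", "Y"), ("X", "Y")}

print(sorted(remove_incoming(G, {"X"})))  # [('U', 'Y'), ('X', 'Y')]
print(sorted(remove_outgoing(G, {"X"})))  # [('U', 'X'), ('U', 'Y')]
```

Combined graphs such as $G_{\overline{X}\underline{Z}}$ are obtained by composing the two calls; the order doesn't matter because both only delete edges.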

- Note that the intervention joint distribution might have different conditional independence relationships than the original joint.

- Of course, we don't know the intervention joint. Our goal is to connect the quantity we care about under that joint, $p(y \mid \mathrm{do}(x))$, with some quantity that we can estimate from observations of the original joint. For example, if our causal assumption is that $X \rightarrow Y$ (and there are no other variables), then $p(y \mid \mathrm{do}(x)) = p(y \mid x)$. On the other hand, if we assume $Y \rightarrow X$, then we have $p(y \mid \mathrm{do}(x)) = p(y)$, because the intervention breaks the dependence.
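The two cases can be checked on a single made-up observational joint $p(x, y)$: the same data give different interventional answers depending on the assumed direction. The joint table and the `graph` string argument are hypothetical, for illustration only.

```python
# Hypothetical observational joint p(x, y) over binary X, Y (numbers made up).
P_XY = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}


def p_x(x: int) -> float:
    return sum(p for (xx, _), p in P_XY.items() if xx == x)


def p_y(y: int) -> float:
    return sum(p for (_, yy), p in P_XY.items() if yy == y)


def p_y_do_x(y: int, x: int, graph: str) -> float:
    """p(y | do(x)) for the same observational joint under two causal assumptions."""
    if graph == "X->Y":
        # p(x, y) = p(x) p(y | x); do(x) deletes the factor p(x), leaving p(y | x).
        return P_XY[(x, y)] / p_x(x)
    if graph == "Y->X":
        # p(x, y) = p(y) p(x | y); do(x) deletes the factor p(x | y), leaving p(y).
        return p_y(y)
    raise ValueError(f"unknown graph: {graph!r}")


print(p_y_do_x(1, 1, "X->Y"))  # 0.8
print(p_y_do_x(1, 1, "Y->X"))  # 0.5
```

Nothing in the data distinguishes the two answers; only the causal assumption does.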

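- In general, do-calculus is the set of rules by which we try to rewrite quantities that include an intervention (like $p(y \mid \mathrm{do}(x))$) in terms of observable quantities (like $p(y \mid x)$ or $p(y)$). For disjoint sets of nodes $X$, $Y$, $Z$, $W$, the three rules are: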

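- Ignoring observations (rule 1): $p(y \mid \mathrm{do}(x), z, w) = p(y \mid \mathrm{do}(x), w)$ if $(Y \perp Z \mid X, W)$ in the mutilated graph $G_{\overline{X}}$.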

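- This just says that the usual conditional-independence reasoning applies within the mutilated graph: once we've intervened on $X$, an observation $Z$ that is d-separated from $Y$ (given $X$ and $W$) can be dropped.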

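- Action / observation exchange (rule 2, a generalization of the backdoor criterion): $p(y \mid \mathrm{do}(x), \mathrm{do}(z), w) = p(y \mid \mathrm{do}(x), z, w)$ if $(Y \perp Z \mid X, W)$ in the graph $G_{\overline{X}\underline{Z}}$, where we've removed the edges into $X$ and the edges out of $Z$.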

- In words: it doesn't matter whether you intervene on or condition on $Z$ if the only dependence between $Y$ and $Z$ is via causal chain(s) from $Z$ to $Y$ (i.e., if there is no open 'back door' path, such as through a latent variable that affects both $Y$ and $Z$).

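- Ignoring actions / interventions (rule 3): $p(y \mid \mathrm{do}(x), \mathrm{do}(z), w) = p(y \mid \mathrm{do}(x), w)$ if $(Y \perp Z \mid X, W)$ in the graph $G_{\overline{X}\,\overline{Z(W)}}$, where $Z(W)$ denotes the set of nodes in $Z$ that are not ancestors of any node in $W$ in $G_{\overline{X}}$.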

- In words: intervening on $Z$ has no effect on $Y$ if $W$ is known/fixed and the only dependence between $Y$ and $Z$ was through a path that is blocked by $W$.

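- These rules turn out to be complete for identifying causal effects: if an interventional distribution like $p(y \mid \mathrm{do}(x))$ can be identified from the observational distribution at all, then it can be derived by repeated application of the three rules (shown independently by Shpitser & Pearl and by Huang & Valtorta in 2006).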
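The graphical side conditions of the three rules can be checked mechanically. Below is a minimal sketch, assuming a DAG stored as a set of `(parent, child)` edges; all function names are hypothetical. It tests d-separation with the moralized-ancestral-graph criterion (a standard equivalent of the path-blocking definition), and each `ruleN` only checks the graphical condition; it doesn't rewrite expressions.

```python
from itertools import combinations

# Hypothetical helpers: a DAG is a set of (parent, child) edges; X, Y, Z, W are sets.


def remove_incoming(edges, nodes):
    """Bar operation: delete every edge pointing into `nodes`."""
    return {(a, b) for a, b in edges if b not in nodes}


def remove_outgoing(edges, nodes):
    """Underline operation: delete every edge leaving `nodes`."""
    return {(a, b) for a, b in edges if a not in nodes}


def ancestors(edges, nodes):
    """`nodes` together with all of their ancestors in `edges`."""
    parents = {}
    for a, b in edges:
        parents.setdefault(b, set()).add(a)
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in parents.get(stack.pop(), ()):
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def d_separated(edges, xs, ys, zs):
    """True iff xs and ys are d-separated given zs (moralized ancestral graph test)."""
    keep = ancestors(edges, xs | ys | zs)
    adj = {n: set() for n in keep}
    parents = {n: set() for n in keep}
    for a, b in edges:
        if b in keep:  # then a is in keep too, being an ancestor of b
            adj[a].add(b)
            adj[b].add(a)
            parents[b].add(a)
    for ps in parents.values():  # moralize: connect parents of a common child
        for p, q in combinations(ps, 2):
            adj[p].add(q)
            adj[q].add(p)
    seen = xs - zs
    stack = list(seen)
    while stack:  # undirected search that never enters the conditioning set
        n = stack.pop()
        if n in ys:
            return False
        for m in adj[n] - zs - seen:
            seen.add(m)
            stack.append(m)
    return True


def rule1(edges, y, x, z, w):
    """p(y|do(x),z,w) = p(y|do(x),w) if (Y indep Z | X, W) in G_bar(X)."""
    return d_separated(remove_incoming(edges, x), y, z, x | w)


def rule2(edges, y, x, z, w):
    """p(y|do(x),do(z),w) = p(y|do(x),z,w) if (Y indep Z | X, W) in G_bar(X)_underline(Z)."""
    return d_separated(remove_outgoing(remove_incoming(edges, x), z), y, z, x | w)


def rule3(edges, y, x, z, w):
    """p(y|do(x),do(z),w) = p(y|do(x),w) if (Y indep Z | X, W) in G_bar(X, Z(W))."""
    z_w = z - ancestors(remove_incoming(edges, x), w)  # Z nodes not ancestors of W
    return d_separated(remove_incoming(edges, x | z_w), y, z, x | w)


G = {("U", "X"), ("U", "Y"), ("X", "Y")}
print(rule2(G, {"Y"}, set(), {"X"}, set()))  # False: back door X <- U -> Y is open
print(rule2(G, {"Y"}, set(), {"X"}, {"U"}))  # True: conditioning on U blocks it
```

The second call says $p(y \mid \mathrm{do}(x), u) = p(y \mid x, u)$ in this graph, which is the step behind ordinary backdoor adjustment (if $U$ were observed).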

- Example: smoking and lung cancer. Let $X$ indicate smoking, $Y$ indicate tar buildup in the lungs, and $Z$ indicate lung cancer. Suppose that these are observed, but we also hypothesize a hidden confounder $U$ (perhaps genetic or societal factors), giving the graph $X \rightarrow Y \rightarrow Z$ with $X \leftarrow U \rightarrow Z$.

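- We are interested in the effect $p(z \mid \mathrm{do}(x))$. In the mutilated graph $G_{\overline{X}}$, we can expand this conditional as $p(z \mid \mathrm{do}(x)) = \sum_y p(z \mid \mathrm{do}(x), y)\, p(y \mid \mathrm{do}(x))$. Note that we need to keep the $\mathrm{do}(x)$ conditioning, even where we could otherwise drop conditioning on $x$, in order to indicate that we are still in the mutilated graph.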

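- By the backdoor criterion (rule 2), we have $p(y \mid \mathrm{do}(x)) = p(y \mid x)$, since $Y \perp X$ in $G_{\underline{X}}$ (the path $X \leftarrow U \rightarrow Z \leftarrow Y$ is blocked at the collider $Z$). Rule 2 also gives $p(z \mid \mathrm{do}(x), y) = p(z \mid \mathrm{do}(x), \mathrm{do}(y))$, since $Z \perp Y \mid X$ in $G_{\overline{X}\underline{Y}}$.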

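- By rule 3, we also have $p(z \mid \mathrm{do}(x), \mathrm{do}(y)) = p(z \mid \mathrm{do}(y))$, since $Z \perp X \mid Y$ in $G_{\overline{X}\,\overline{Y}}$.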

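- In order to get an estimate in terms of real-world quantities, we'd need to sum out $x$ in $p(z \mid \mathrm{do}(y))$, i.e., adjust for $X$, which blocks the back-door path $Y \leftarrow X \leftarrow U \rightarrow Z$. To do this, we need: $p(z \mid \mathrm{do}(y)) = \sum_{x'} p(z \mid \mathrm{do}(y), x')\, p(x' \mid \mathrm{do}(y)) = \sum_{x'} p(z \mid y, x')\, p(x')$, using rule 2 for the first factor ($Z \perp Y \mid X$ in $G_{\underline{Y}}$) and rule 3 for the second ($X \perp Y$ in $G_{\overline{Y}}$). (This is not the shortest derivation, but I did make it work.)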

- Therefore we have $p(z \mid \mathrm{do}(x)) = \sum_y p(y \mid x) \sum_{x'} p(z \mid y, x')\, p(x')$. This formula turns out to be known as the front-door adjustment.
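As a numeric sanity check of the front-door adjustment, here is a minimal sketch assuming hypothetical binary CPTs for the smoking graph (all numbers made up). It builds the observational joint $p(x, y, z)$ with $U$ summed out, evaluates the front-door formula using only that joint, and compares against the ground-truth $p(z \mid \mathrm{do}(x))$ computed from the full model, which we normally wouldn't have.

```python
from itertools import product

# Hypothetical binary model for the smoking example (all numbers are made up):
#   U -> X -> Y -> Z, plus U -> Z, with U latent.
P_U1 = 0.3                             # p(u=1)
P_X1 = {0: 0.2, 1: 0.9}                # p(x=1 | u)
P_Y1 = {0: 0.1, 1: 0.8}                # p(y=1 | x)
P_Z1 = {(0, 0): 0.1, (0, 1): 0.4,      # p(z=1 | y, u), keyed by (y, u)
        (1, 0): 0.5, (1, 1): 0.9}


def bern(p1, v):
    """Probability of value v in {0, 1} under a Bernoulli with P(1) = p1."""
    return p1 if v == 1 else 1.0 - p1


# Observational joint p(x, y, z): all we could estimate from data (U summed out).
P_XYZ = {
    (x, y, z): sum(bern(P_U1, u) * bern(P_X1[u], x) * bern(P_Y1[x], y)
                   * bern(P_Z1[(y, u)], z) for u in (0, 1))
    for x, y, z in product((0, 1), repeat=3)
}


def prob(x=None, y=None, z=None):
    """Observational probability of the given values (None = summed out)."""
    return sum(p for (xx, yy, zz), p in P_XYZ.items()
               if (x is None or xx == x) and (y is None or yy == y)
               and (z is None or zz == z))


def front_door(z, x):
    """sum_y p(y|x) sum_x' p(z|y,x') p(x'), from observational quantities only."""
    return sum(
        prob(x=x, y=y) / prob(x=x)
        * sum(prob(x=xp, y=y, z=z) / prob(x=xp, y=y) * prob(x=xp) for xp in (0, 1))
        for y in (0, 1)
    )


def true_effect(z, x):
    """Ground-truth p(z | do(x)) from the full model: drop p(x|u), clamp X = x."""
    return sum(bern(P_U1, u) * bern(P_Y1[x], y) * bern(P_Z1[(y, u)], z)
               for u in (0, 1) for y in (0, 1))


def naive(z, x):
    """Plain conditional p(z | x), which is confounded by U."""
    return prob(x=x, z=z) / prob(x=x)


for x in (0, 1):
    print(f"x={x}: front-door={front_door(1, x):.4f} "
          f"truth={true_effect(1, x):.4f} naive p(z|x)={naive(1, x):.4f}")
```

With these numbers, the front-door column matches the ground truth while the naive conditional $p(z \mid x)$ does not. The match relies on the assumed graph (in particular, no direct $X \rightarrow Z$ edge and no confounding of $X \rightarrow Y$), and on every $p(x, y)$ being positive so the divisions are defined.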

- TODO: finish this. Sources: Introduction to Causal Calculus (ubc.ca); Lies, Damned Lies, and Causal Inference (Keyon Vafa).