Modified: September 06, 2022

infinitesimal

This page is from my personal notes, and has not been specifically reviewed for public consumption. It might be incomplete, wrong, outdated, or stupid. Caveat lector.

The Leibniz calculus notation using infinitesimal quantities like $dx$ or $dt$ is simultaneously

- Very sensible and intuitive, but also

- Constantly confusing to me.

A lot of this is probably specific to my own idiosyncratic understanding, but in any case this note is an attempt to work through points that have confused me.

Discrete time. I like that infinitesimals connect intuitively to the finite-sum case where we're summing over some change at each timestep. But when considering discrete 'timesteps' we often implicitly take $dt = 1$, which can make it ambiguous which of $dx$ and $\frac{dx}{dt}$ we actually mean. In general, if a change is 'per timestep', it should be written as $\frac{dx}{dt}\,dt$ (or similar) to emphasize that two timesteps would give twice as much change, half a timestep half as much, etc.
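To make the ambiguity concrete, here is a minimal forward-Euler sketch. The functions `simulate`, `simulate_naive`, and `rate` are hypothetical names made up for illustration. One loop writes the per-step change as rate times width, and the other treats the rate itself as "the change per step", which silently assumes $dt = 1$.

```python
def simulate(x0, velocity, t_end, dt):
    """Forward-Euler integration of dx/dt = velocity(t) from t = 0 to t_end."""
    x, t = x0, 0.0
    for _ in range(round(t_end / dt)):
        x += velocity(t) * dt  # per-step change written as rate * width
        t += dt
    return x


def simulate_naive(x0, velocity, t_end, dt):
    """Same loop, but treats velocity(t) itself as 'the change per step'.

    This implicitly assumes dt == 1, conflating dx with dx/dt.
    """
    x, t = x0, 0.0
    for _ in range(round(t_end / dt)):
        x += velocity(t)  # no dt factor
        t += dt
    return x


def rate(t):
    # Hypothetical constant rate of 3 units per unit time.
    return 3.0


for dt in (1.0, 0.5):
    print(dt, simulate(0.0, rate, 4.0, dt), simulate_naive(0.0, rate, 4.0, dt))
```

At `dt = 1.0` both versions return 12.0, which is exactly why the ambiguity goes unnoticed. At `dt = 0.5` only the version with the explicit `dt` factor still returns 12.0. The naive one doubles to 24.0.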

Dependence. If $x$ is a function of time $t$, then its derivative $\frac{dx}{dt}$ is also a function of time. But what about $dx$ and $dt$ individually? Are these also functions of time? If so, shouldn't we write them with this explicit dependence?

Let's start with $dt$. Ultimately we can think of whatever derivative or integral we're evaluating as the limit of a ratio or sum as some 'width' variable goes to zero. In principle we could define $dt$ as nonuniform in $t$, describing e.g. a sum in which the 'width' of the time buckets is larger in some parts of the domain than in others. But usually it makes sense to identify $dt$ directly with that width variable, i.e., consider the limit of all timesteps becoming infinitesimally small in a uniform way, and then $dt$ has no time dependence.
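As a small check on the uniform vs. nonuniform picture, the sketch below computes left Riemann sums of $\int_0^1 \cos t\,dt = \sin 1$. One grid has equal bucket widths, and one has widths that grow with $t$. The grid constructions and helper names are hypothetical choices for illustration. Both sums converge because the largest bucket width goes to zero in both cases.

```python
import math


def riemann_sum(f, grid):
    """Left Riemann sum of f over the sorted partition points in `grid`.

    Bucket widths grid[i+1] - grid[i] are allowed to differ.
    """
    return sum(f(a) * (b - a) for a, b in zip(grid, grid[1:]))


def uniform_grid(n, t_end=1.0):
    # Every bucket has width t_end / n: the width has no t dependence.
    return [t_end * i / n for i in range(n + 1)]


def nonuniform_grid(n, t_end=1.0):
    # Buckets are narrow near t = 0 and wide near t = t_end: width depends on t.
    return [t_end * (i / n) ** 2 for i in range(n + 1)]


exact = math.sin(1.0)
for n in (10, 100, 1000):
    err_uniform = abs(riemann_sum(math.cos, uniform_grid(n)) - exact)
    err_nonuniform = abs(riemann_sum(math.cos, nonuniform_grid(n)) - exact)
    print(n, err_uniform, err_nonuniform)
```

Both error columns shrink toward zero. In that sense the nonuniform grid describes the same integral, and the uniform grid is simply the more convenient limiting process.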

On the other hand, $x$ is a function of time, and any small change $dt$ in time will induce a corresponding change $dx$. This quantity is absolutely a function of time. It is also, implicitly, a function of $dt$, in that a larger input change $dt$ will lead to a larger output change $dx$. More properly, though, we can think of both of these as functions of the underlying width variable that goes to zero.
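A quick numerical illustration, assuming a hypothetical trajectory $x(t) = \sin t$. The same small step `dt` produces different `dx` at different times, and doubling `dt` roughly doubles `dx`. The ratio `dx / dt`, however, settles near $\cos t$ regardless of the step.

```python
import math


def x(t):
    # Hypothetical trajectory chosen for illustration.
    return math.sin(t)


def dx(t, dt):
    """Change in x induced by a small change dt in time, starting at time t."""
    return x(t + dt) - x(t)


for t in (0.0, 1.0, 2.0):
    for dt in (1e-3, 2e-3):
        print(f"t={t}, dt={dt}: dx={dx(t, dt):.6f}, dx/dt={dx(t, dt) / dt:.4f}")
```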

Partial differentials. How do $\partial x$ and $dx$ relate to each other? Do they have the same units? Does $\partial x = dx$, does $\frac{\partial x}{dx} = 1$, or is there a clear principle for why neither of these can occur in a valid expression?

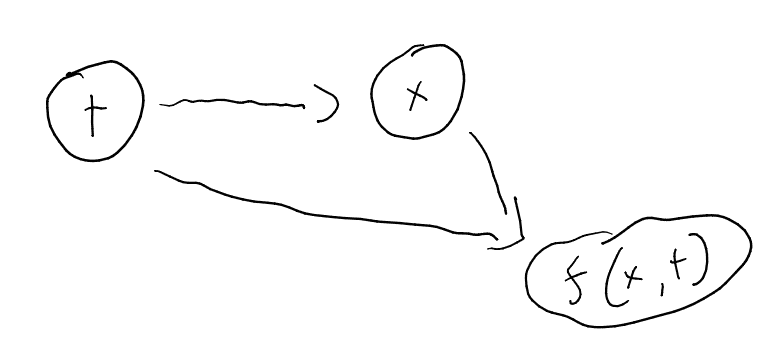

I think this starts to implicate a computational graph view of calculus. For concreteness of illustration, let , and consider some function . The full picture here looks like

in which everything is downstream of $x$, which functions as an exogenous or 'input' variable. In this picture, the total derivative $\frac{df}{dx}$ is a question about the graph as a whole: how will the value at the output node $f$ change in response to a change at the input node $x$? The partial derivative $\frac{\partial f}{\partial y}$ is a local question about the final node: how will the value of that node change if we vary the second argument while holding the first argument constant? We can relate these using the law of total differentiation:

$$\frac{df}{dx} = \frac{\partial f}{\partial x} + \frac{\partial f}{\partial y}\frac{dy}{dx}.$$

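This is in terms of ratios of infinitesimals, where a $\partial$ differential always appears in a ratio with another $\partial$ differential (and similarly for $d$), so the 'units' always cancel out. In general it's not valid to cancel across infinitesimal units. For example, we can't simplify $\frac{\partial f}{\partial y}\frac{dy}{dx}$ to $\frac{\partial f}{dx}$ by cancelling $\partial y$ against $dy$, and it's not clear what it would mean if we could.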

I think the right way to think about this is that global/total differentials can generally be treated within a single global limit. For some perturbation $dx$ to the input of the computation graph, the perturbations $dy$ and $df$ fall out naturally. So there is a consistent process relating all of these quantities, in some sense a single coherent thought experiment, which allows us to meaningfully ask about their ratios.
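The "single coherent thought experiment" can be sketched numerically. The example uses hypothetical choices $y = g(x) = x^2$ and $f(x, y) = xy$, so that $f = x^3$ along the graph. We apply one perturbation `h` to the input, read off `dy` and `df` from the same forward pass, and compare the ratio `df / dx` with the law of total differentiation, $\frac{df}{dx} = \frac{\partial f}{\partial x} + \frac{\partial f}{\partial y}\frac{dy}{dx} = y + x \cdot 2x$.

```python
def g(x):
    # Hypothetical upstream node: y = g(x) = x**2.
    return x ** 2


def f(x, y):
    # Hypothetical output node: f(x, y) = x * y.
    return x * y


def forward(x):
    """Evaluate the whole graph: x feeds g, and both x and y feed f."""
    y = g(x)
    return y, f(x, y)


x0, h = 1.5, 1e-6
# One perturbation dx at the input; dy and df fall out of the same run.
y0, f0 = forward(x0)
y1, f1 = forward(x0 + h)
dx, dy, df = h, y1 - y0, f1 - f0
print("dy/dx from one experiment:", dy / dx)
print("df/dx from one experiment:", df / dx)

# Law of total differentiation with this example's analytic partials:
#   df/dx = df/dx|_y + df/dy|_x * dy/dx = y + x * (2x)
print("total-derivative law:      ", y0 + x0 * 2 * x0)
```

Both `df / dx` and the law give approximately $3x^2 = 6.75$ at $x = 1.5$. Every differential here comes from the same perturbation `h`, which is what makes the ratios meaningful.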

By contrast, the partial derivative $\frac{\partial f}{\partial y}$ is asking about a modified copy of the graph where we freeze all inputs to $f$ except for $y$. (This is vaguely reminiscent of the graph surgery prescribed by do-calculus for causal inference, but only vaguely.) In this graph, $\partial y$ and $\partial f$ are the only changes that are even defined. The $\partial$ indicates units corresponding to this particular thought experiment, so the only way to 'leave the thought experiment' and get an objectively meaningful quantity is to consider the ratio $\frac{\partial f}{\partial y}$ or its inverse. There is no meaningful way for partial differentials to interact with quantities in a different thought experiment.
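The "modified copy of the graph" can be sketched as a function that freezes $x$ and perturbs only $y$ by some step `k`. It reuses the hypothetical $f(x, y) = xy$, evaluated at $x = 1.5$, $y = 2.25$, and `partial_f_wrt_y` is a made-up helper. The ratio $\partial f / \partial y$ does not depend on the arbitrary `k`, but the raw $\partial f$ scales with it. Dividing that $\partial f$ by a $dx$ from a different experiment would therefore give a number determined by an arbitrary choice.

```python
def f(x, y):
    # Hypothetical output node: f(x, y) = x * y.
    return x * y


x0, y0 = 1.5, 2.25  # y0 = x0**2, matching the y = g(x) = x**2 example


def partial_f_wrt_y(x, y, k):
    """Surgically modified graph: x is frozen and only y is perturbed by k.

    Returns (partial_y, partial_f), the only two changes defined here.
    """
    return k, f(x, y + k) - f(x, y)


for k in (1e-3, 1e-6):
    dy_part, df_part = partial_f_wrt_y(x0, y0, k)
    # The ratio stays near x0 = 1.5; the raw partial_f scales with k.
    print(f"k={k}: ratio={df_part / dy_part:.6f}, raw partial_f={df_part:.3e}")
```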

The view of total differentials as a single coherent thought experiment is complicated when we have multiple independent variables. Suppose we had both $x$ and $y$ as exogenous inputs, took $f$ as a function of both of them, and then asked about $df$? I guess derivatives with respect to $x$ have to be interpreted in terms of a limiting process as $dx$ goes to zero. Separately, if we're computing derivatives with respect to $y$, there is a limiting process as $dy$ goes to zero. But these are separate processes, not tied to any common rate. To put a finer point on it: what is $\frac{dy}{dx}$ in this experiment? It's not a well-defined quantity, because neither $x$ nor $y$ is a function of the other; we have total freedom in the relative rate at which those differentials go to zero. So when we're asking about $\frac{\partial f}{\partial x}$, the $\partial f$ there must be different from the one in $\frac{\partial f}{\partial y}$. The first exists in a thought experiment where we've changed $x$ while leaving $y$ unchanged, and the second in the opposite thought experiment. TODO resolve this.
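A small sketch of the "total freedom in the relative rate". It assumes a hypothetical $f(x, y) = xy$ with two independent inputs. We shrink $dx = a h$ and $dy = b h$ together for several hand-picked ratios $a : b$. The value of $dy/dx$ is whatever ratio we chose, and $df/dx$ changes with that choice. Only the $b = 0$ experiment, which leaves $y$ unchanged, recovers $\partial f / \partial x = y$.

```python
def f(x, y):
    # Hypothetical output depending on two independent inputs x and y.
    return x * y


x0, y0 = 1.5, 2.0


def perturb(a, b, h):
    """Shrink dx = a*h and dy = b*h to zero together at the chosen rate a:b."""
    dx, dy = a * h, b * h
    df = f(x0 + dx, y0 + dy) - f(x0, y0)
    return dx, dy, df


h = 1e-6
for a, b in [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 3.0)]:
    dx, dy, df = perturb(a, b, h)
    dy_over_dx = dy / dx if dx else float("inf")
    df_over_dx = df / dx if dx else float("nan")
    print(f"rate {a}:{b}  dy/dx = {dy_over_dx}  df/dx = {df_over_dx:.4f}")
```

With rate 1:0, `df/dx` is about 2.0, which equals $y_0$. With rate 1:1 it is about 3.5, and with rate 1:3 it is about 6.5. The "derivative" is only pinned down once we fix which thought experiment we are in.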

Differential operators. Why can we write but not ?

Higher-order derivatives. Why is the second derivative written as $\frac{d^2 y}{dx^2}$, and what do the individual terms $d^2 y$ and $dx^2$ mean?
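One common finite-difference reading of the notation $\frac{d^2 y}{dx^2}$ treats $d^2 y$ as a second difference, "the change in the change" of $y$ over two adjacent steps. Under that reading, $dx^2$ means $(dx)^2$, the squared step width, not $d(x^2)$. The sketch below uses a hypothetical $y = e^x$, whose second derivative is again $e^x$. It is one interpretation, not the only way to make sense of the notation.

```python
import math


def second_difference(y, x, h):
    """d2y: the change in the change of y over two adjacent steps of width h."""
    return y(x + h) - 2 * y(x) + y(x - h)


y = math.exp  # hypothetical example; its second derivative is also exp
x0 = 0.5
for h in (1e-1, 1e-2, 1e-3):
    d2y = second_difference(y, x0, h)
    dx2 = h ** 2  # (dx)^2: the squared step width
    print(f"h={h}: d2y/dx2 = {d2y / dx2:.6f}  (exact {math.exp(x0):.6f})")
```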

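It might help me to work through Wikipedia's description of differentials as linear maps. For a function $f(x)$, we define the differential $df(x, \Delta x) = f'(x)\,\Delta x$. A multidimensional function $f(x_1, \ldots, x_n)$ has differential

$$df(x_1, \ldots, x_n, \Delta x_1, \ldots, \Delta x_n) = \sum_{i=1}^{n} \frac{\partial f}{\partial x_i}(x_1, \ldots, x_n)\,\Delta x_i.$$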
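The linear-map view, $df(x, \Delta x) = \sum_i \frac{\partial f}{\partial x_i}(x)\,\Delta x_i$, can be checked numerically. The sketch assumes a hypothetical two-argument function $f(x_1, x_2) = x_2 \sin x_1$ with hand-written partials. For a fixed point $x$, the differential is linear in $\Delta x$. Its gap from the actual change $f(x + \Delta x) - f(x)$ shrinks faster than $\Delta x$ itself.

```python
import math


def f(x1, x2):
    # Hypothetical two-argument function.
    return math.sin(x1) * x2


def grad_f(x1, x2):
    # Analytic partial derivatives of f with respect to x1 and x2.
    return math.cos(x1) * x2, math.sin(x1)


def differential(x, delta):
    """df(x, delta) = sum_i (partial f / partial x_i)(x) * delta_i; linear in delta."""
    return sum(g * d for g, d in zip(grad_f(*x), delta))


x = (0.7, 2.0)
for scale in (1e-1, 1e-2, 1e-3):
    delta = (scale, scale)
    actual = f(x[0] + delta[0], x[1] + delta[1]) - f(*x)
    approx = differential(x, delta)
    # The relative gap shrinks roughly in proportion to the step size.
    rel_gap = abs(actual - approx) / abs(approx)
    print(f"scale={scale}: actual={actual:.3e}  df={approx:.3e}  rel. gap={rel_gap:.2e}")
```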

Here the differential is only defined on functions; we finesse this by treating and as functions on a 'standard infinitesimal' , so that and ? I'm confused by the 'standard infinitesimal' setup because it seems like it implies a dependence between and that doesn't necessarily exist.